CountCLIP: Teaching CLIP to Count to Ten

CountCLIP is an implementation of Google Research’s paper “Teaching CLIP to Count to Ten”, published at ICCV 2023. The project teaches Vision-Language Models (VLMs) such as CLIP to count objects in images accurately, a capability that standard CLIP models lack.

The Problem

Large vision-language models like CLIP have revolutionized computer vision by learning robust representations of text and images. However, these models struggle with compositional concepts, particularly counting objects in images. Standard CLIP models are surprisingly insensitive to the number of objects present, making them unreliable for tasks that require quantitative understanding.

Our Approach

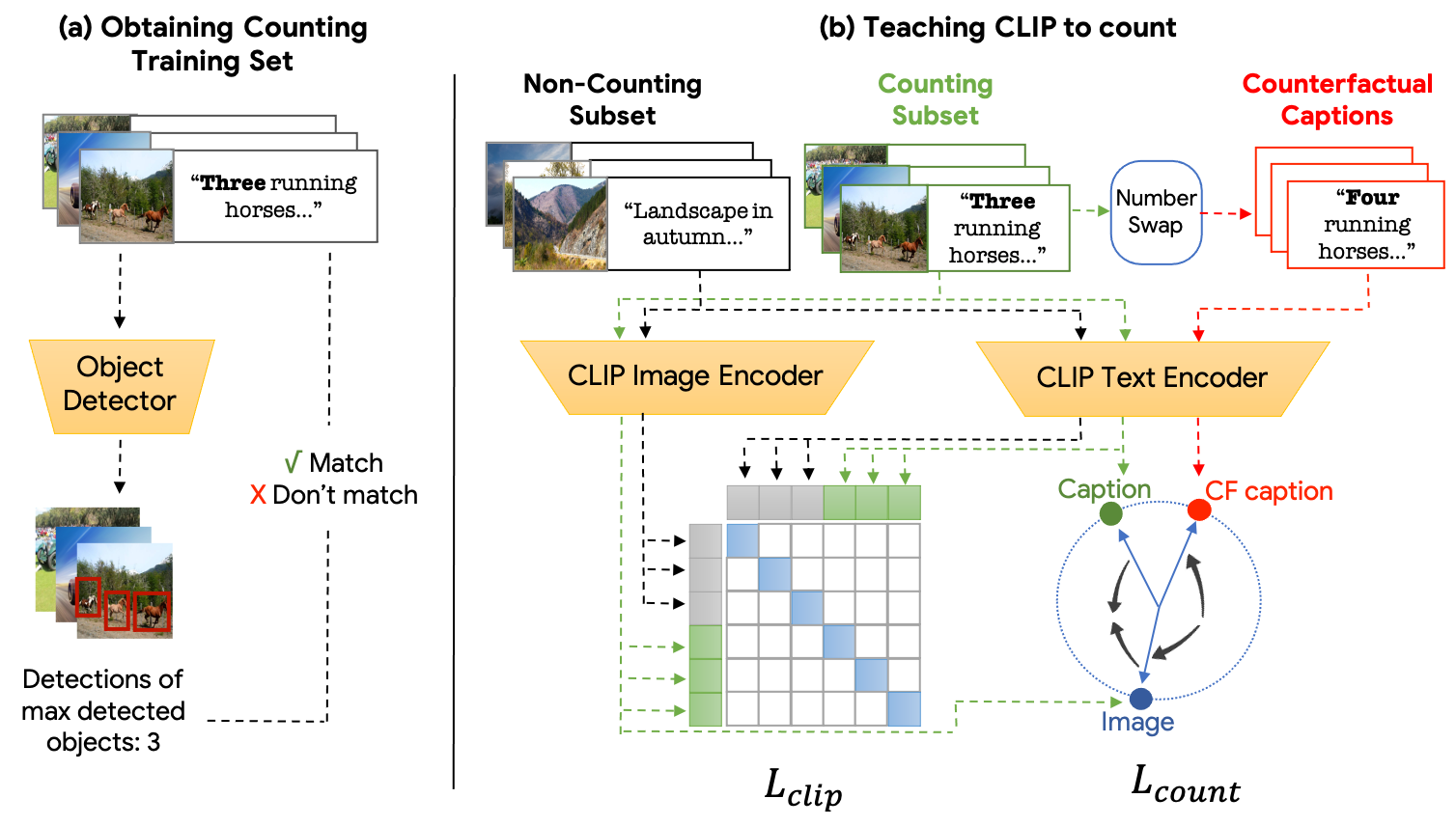

CountCLIP addresses this limitation by fine-tuning CLIP with a specialized counting-contrastive loss function. The key innovation is creating “hard negative” examples by swapping the true object count in image captions with incorrect counts. This teaches the model to discriminate between correct and incorrect object counts while maintaining its general vision-language capabilities.
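The hard-negative idea can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the project's training code: the helper names `make_counterfactual_caption` and `counting_loss` are hypothetical, the embeddings are assumed to be already L2-normalized vectors from CLIP's image and text encoders, and the loss shown is one plausible form of a counting-contrastive term (a two-way softmax between the true caption and its count-swapped counterpart).

```python
import math
import random
import re

# Number words the captions are filtered on ("two" through "ten").
NUMBER_WORDS = ["two", "three", "four", "five", "six", "seven", "eight", "nine", "ten"]
_NUMBER_RE = re.compile(r"\b(" + "|".join(NUMBER_WORDS) + r")\b", re.IGNORECASE)


def make_counterfactual_caption(caption, rng=random):
    """Swap the first number word in `caption` for a different, incorrect one.

    Returns None when the caption contains no number word, in which case
    no hard negative is produced for that sample.
    """
    match = _NUMBER_RE.search(caption)
    if match is None:
        return None
    true_word = match.group(1).lower()
    wrong_word = rng.choice([w for w in NUMBER_WORDS if w != true_word])
    return caption[:match.start()] + wrong_word + caption[match.end():]


def dot(u, v):
    """Dot product of two equal-length vectors (cosine similarity if normalized)."""
    return sum(a * b for a, b in zip(u, v))


def counting_loss(image_emb, caption_emb, counterfactual_emb):
    """Assumed loss form: -log(exp(s_pos) / (exp(s_pos) + exp(s_neg))).

    s_pos is the image/true-caption similarity and s_neg the
    image/counterfactual-caption similarity. The expression equals
    log(1 + exp(s_neg - s_pos)), computed here in a numerically stable way.
    """
    diff = dot(image_emb, counterfactual_emb) - dot(image_emb, caption_emb)
    return max(diff, 0.0) + math.log1p(math.exp(-abs(diff)))
```

For example, `make_counterfactual_caption("three dogs on a beach")` keeps the rest of the caption and replaces "three" with another number word. In training, a term like this would typically be added to the regular contrastive loss with a weighting factor, so that the model keeps its general vision-language behavior; the weight itself is a hyperparameter not specified here.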

Dataset Creation

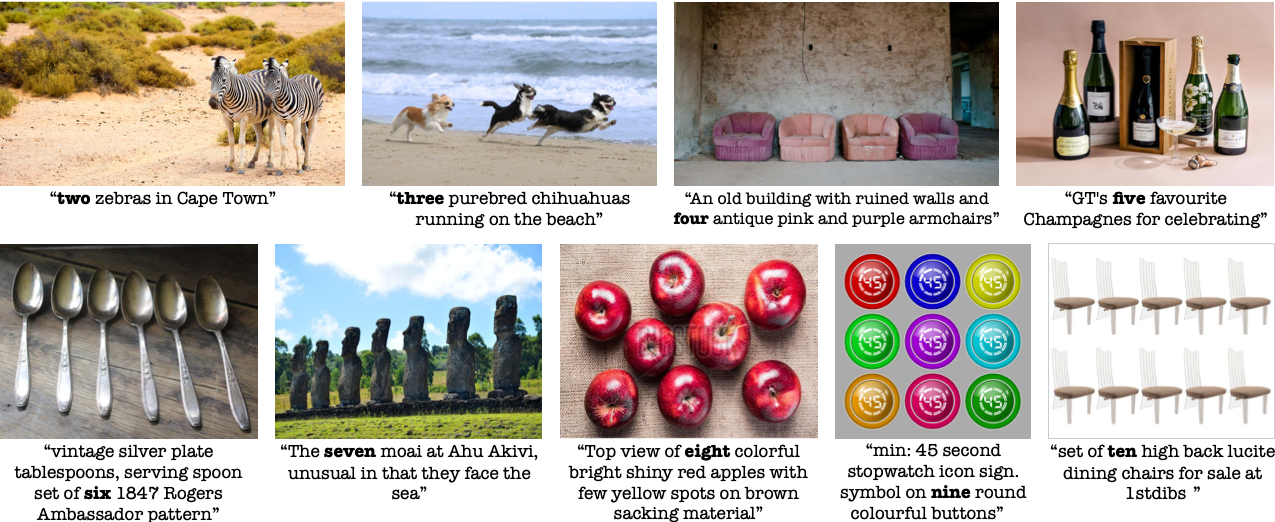

We created a specialized counting dataset by:

- Filtering the LAION-400M dataset for images with captions containing number words (“two” through “ten”)

- Verifying that the images actually contain the stated number of objects

- Creating a balanced dataset with approximately 2,000 counting images and 13,000 non-counting images

This carefully curated dataset enables effective training of the counting capability while preserving CLIP’s general performance.
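The caption-filtering step listed above might look roughly like the following. It is a minimal sketch that covers only the text side: the function names `stated_counts` and `split_captions` are hypothetical, and the image verification step (checking that the stated number of objects is really present) is not shown because its method is not described here.

```python
import re

# Map each number word used for filtering to its integer count.
WORD_TO_COUNT = {
    "two": 2, "three": 3, "four": 4, "five": 5, "six": 6,
    "seven": 7, "eight": 8, "nine": 9, "ten": 10,
}
# Word boundaries prevent matches inside words such as "often" or "seventeen".
_PATTERN = re.compile(r"\b(" + "|".join(WORD_TO_COUNT) + r")\b", re.IGNORECASE)


def stated_counts(caption):
    """Return the counts named in a caption, in order of appearance."""
    return [WORD_TO_COUNT[word.lower()] for word in _PATTERN.findall(caption)]


def split_captions(captions):
    """Split captions into counting candidates and non-counting captions.

    Counting candidates are returned as (caption, counts) pairs so that a
    later verification step can compare the counts against the image.
    """
    counting, non_counting = [], []
    for caption in captions:
        counts = stated_counts(caption)
        if counts:
            counting.append((caption, counts))
        else:
            non_counting.append(caption)
    return counting, non_counting
```

Captions that name more than one count (for example "two cats and three dogs") are kept with all of their counts; whether such samples should be used or discarded is a design choice left open in this sketch.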

Results

CountCLIP significantly outperforms standard CLIP models on counting tasks while maintaining performance on standard vision benchmarks. Our implementation demonstrates:

- Improved zero-shot counting accuracy

- Maintained performance on general image classification tasks

- Enhanced capabilities for downstream applications like image retrieval and text-to-image generation
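To make "zero-shot counting accuracy" concrete, the sketch below shows one common way such a metric can be computed: for each image, build one caption per candidate count (for instance "two dogs" through "ten dogs"), embed everything, and predict the count whose caption is most similar to the image. The function names are hypothetical, and the embeddings are assumed to come from the model's image and text encoders; this is not necessarily the exact evaluation protocol used by the project.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def predict_count(image_emb, caption_embs_by_count):
    """Return the count whose caption embedding best matches the image.

    `caption_embs_by_count` maps each candidate count (e.g. 2..10) to the
    text embedding of a caption stating that count.
    """
    return max(
        caption_embs_by_count,
        key=lambda count: cosine(image_emb, caption_embs_by_count[count]),
    )


def counting_accuracy(examples):
    """Fraction of examples whose predicted count equals the true count.

    Each example is a (image_emb, caption_embs_by_count, true_count) triple.
    """
    if not examples:
        return 0.0
    correct = sum(
        1 for image_emb, caption_embs, true_count in examples
        if predict_count(image_emb, caption_embs) == true_count
    )
    return correct / len(examples)
```

The same similarity ranking underlies count-aware retrieval: instead of ranking captions for one image, the images are ranked for one caption.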

Applications

The counting-aware CLIP model enables several important applications:

- Improved Image Retrieval: More accurate retrieval of images containing specific numbers of objects

- Enhanced Text-to-Image Generation: When used with image generation models, CountCLIP produces more accurate counts of requested objects

- Better Visual Question Answering: More reliable responses to questions about quantities in images

Try It Yourself

We’ve created a Colab demo where you can experiment with CountCLIP’s counting capabilities:

Acknowledgements

This project builds upon work from: