TITANS for Squared Amplitude Calculation

Implementing Google's Titans architecture for particle physics calculations

Project Overview

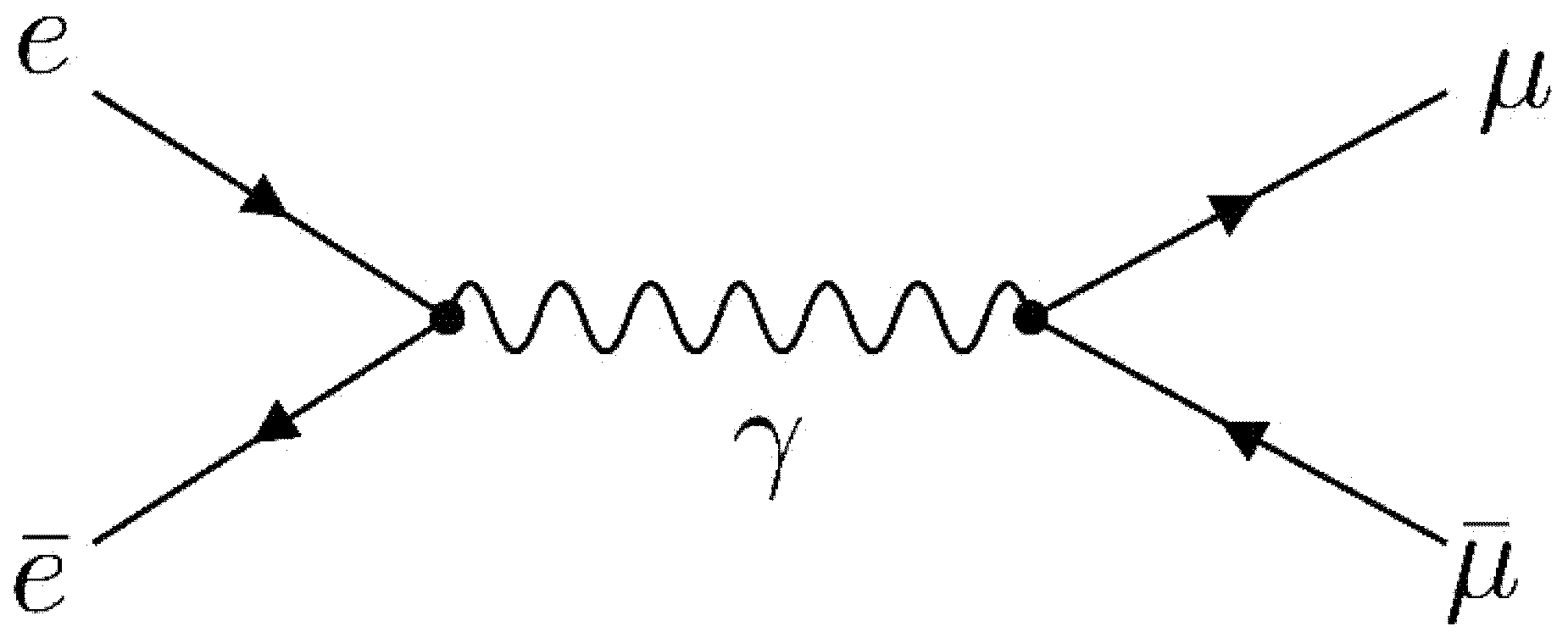

In particle physics, squared amplitudes are crucial for calculating cross-sections, which provide a testable link between theory and experiment. This project explores how modern transformer architectures can learn to map from amplitudes to their squared forms using sequence-to-sequence modeling.

This repository implements and evaluates the Memory As Context (MAC) Transformer architecture from Google’s paper “Titans: Learning to Memorize at Test Time” for calculating squared amplitudes in particle physics. The project compares the performance of a standard T5 transformer model against the TITANS architecture on this specialized task.

The TITANS Architecture

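The TITANS architecture changes how a sequence model handles memory. Standard transformers attend only within a fixed context window, and the quadratic cost of attention limits how long that window can be, which makes long-term dependencies hard to capture. TITANS combines attention with a neural long-term memory, organized into three modules: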

Memory Components

- Core Module: Handles the main data processing, capturing short-term dependencies using attention mechanisms similar to traditional Transformers.

- Long-term Memory Module: Stores and retrieves historical information, allowing the model to access relevant past data when processing new inputs.

- Persistent Memory Module: Contains learnable, data-independent parameters that capture task-specific knowledge, providing a stable foundation for processing.

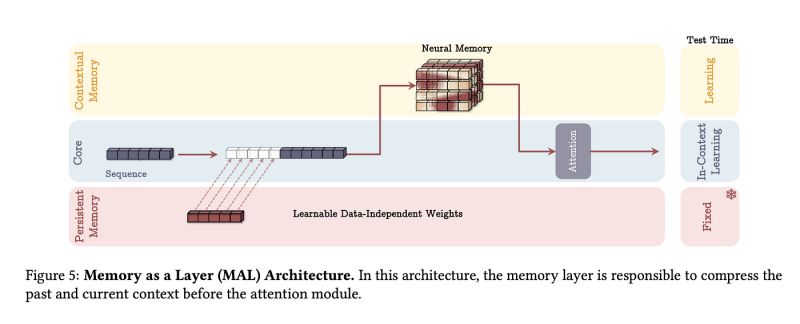
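In the Titans paper, the long-term memory is itself a small network whose weights are updated at test time by a gradient step on an associative loss ||M(k) − v||², with momentum over past "surprise" and a forgetting term: S_t = η·S_(t−1) − θ·∇ℓ and M_t = (1 − α)·M_(t−1) + S_t. The sketch below illustrates that update rule under simplifying assumptions of our own: a linear memory (a single matrix) instead of an MLP, keys and values passed in directly rather than projected from token embeddings, and fixed scalars η, θ, α in place of the paper's data-dependent gates. The class `LinearNeuralMemory` is hypothetical and not taken from this repository or from titans-pytorch.

```python
import numpy as np


class LinearNeuralMemory:
    """Toy long-term memory with M(k) = M @ k (hypothetical, for illustration).

    Simplifications: a linear memory instead of an MLP, and fixed scalars
    eta (momentum), theta (step size) and alpha (forgetting) instead of
    data-dependent gates.
    """

    def __init__(self, dim, eta=0.9, theta=0.1, alpha=0.01):
        self.M = np.zeros((dim, dim))  # memory weights
        self.S = np.zeros((dim, dim))  # accumulated surprise (momentum buffer)
        self.eta, self.theta, self.alpha = eta, theta, alpha

    def loss(self, k, v):
        """Associative loss ||M k - v||^2 for one key/value pair."""
        err = self.M @ k - v
        return float(err @ err)

    def update(self, k, v):
        """One test-time write of the pair (k, v) into memory."""
        grad = 2.0 * np.outer(self.M @ k - v, k)  # d/dM of ||M k - v||^2
        self.S = self.eta * self.S - self.theta * grad  # past + momentary surprise
        self.M = (1.0 - self.alpha) * self.M + self.S  # forget, then apply surprise

    def retrieve(self, q):
        """Read from memory without changing its weights."""
        return self.M @ q


rng = np.random.default_rng(0)
k = rng.normal(size=8)
k /= np.linalg.norm(k)  # a unit-norm key keeps this toy update stable
v = rng.normal(size=8)

mem = LinearNeuralMemory(dim=8)
before = mem.loss(k, v)
for _ in range(100):
    mem.update(k, v)
after = mem.loss(k, v)
print(f"loss before: {before:.4f}, after: {after:.6f}")
```

Repeatedly writing the same pair drives the associative loss toward zero; the forgetting term α keeps the stored value slightly below v, trading perfect recall for the ability to discard stale information.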

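For our implementation, we focused on the Memory as Context (MAC) variant, which treats memory as contextual information for current processing: the input sequence is split into segments, and attention for each segment runs over the persistent memory tokens, the information retrieved from long-term memory for that segment, and the segment itself. This approach has shown particular promise for complex sequence transformation tasks such as our squared amplitude calculations.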
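A single MAC step for one segment can be sketched as follows. Following the description in the Titans paper, the long-term memory is queried with a projection of the segment, and the retrieved vectors are placed between the persistent memory tokens and the segment before attention. This is a toy NumPy sketch under our own assumptions: single-head attention without key/value projections or causal masking, and a random matrix standing in for a trained memory. The function name `mac_attend` is hypothetical rather than taken from titans-pytorch.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def mac_attend(segment, persistent, memory, w_q):
    """One Memory-as-Context step for a single segment (toy sketch).

    segment:    (seg_len, d) representations of the current segment
    persistent: (n_p, d) learnable, input-independent tokens
    memory:     callable mapping queries (n, d) -> retrieved vectors (n, d)
    w_q:        (d, d) projection used to build memory queries
    Returns the attention output for the segment and the assembled context.
    """
    retrieved = memory(segment @ w_q)  # read long-term memory, one vector per token
    # Context = [persistent tokens | retrieved memory | current segment].
    context = np.concatenate([persistent, retrieved, segment], axis=0)
    # Single-head attention from segment tokens over the whole context
    # (no key/value projections and no causal mask, for brevity).
    scores = segment @ context.T / np.sqrt(segment.shape[1])
    return softmax(scores) @ context, context


rng = np.random.default_rng(0)
d = 8
M = rng.normal(size=(d, d))  # stand-in for trained memory weights
out, ctx = mac_attend(
    segment=rng.normal(size=(4, d)),
    persistent=rng.normal(size=(2, d)),
    memory=lambda q: q @ M.T,
    w_q=rng.normal(size=(d, d)),
)
print(out.shape, ctx.shape)  # (4, 8) (10, 8)
```

The context grows by the number of persistent and retrieved tokens, so attention stays local to the segment while still seeing a compressed view of earlier segments through memory.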

Dataset and Preprocessing

The dataset consists of particle physics expressions in the format:

"event type : Feynman diagram : amplitude : squared amplitude"
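A minimal parser for one line of this format is sketched below. It assumes the four fields are separated by the literal string " : " and that this separator never appears inside a field; the real files may need a more careful split. The field names, the `parse_line` helper, and the sample line are hypothetical placeholders, not entries from the dataset.

```python
from typing import NamedTuple


class Example(NamedTuple):
    event_type: str
    diagram: str
    amplitude: str          # model input
    squared_amplitude: str  # model target


def parse_line(line: str, sep: str = " : ") -> Example:
    """Split one dataset line into its four fields."""
    fields = [f.strip() for f in line.strip().split(sep)]
    if len(fields) != 4:
        raise ValueError(f"expected 4 fields, found {len(fields)}: {line!r}")
    return Example(*fields)


ex = parse_line("EVENT : DIAGRAM : AMPLITUDE : SQUARED_AMPLITUDE")
src, tgt = ex.amplitude, ex.squared_amplitude  # sequence-to-sequence pair
print(src, "->", tgt)  # AMPLITUDE -> SQUARED_AMPLITUDE
```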

Index Normalization

One of the key preprocessing steps involves normalizing indices in the physics expressions to reduce vocabulary size and improve model training efficiency. We use regular expressions to identify and standardize different types of indices:

- Momentum indices: patterns like `i_36289`, `j_5`, `k_3`, normalized to sequential small indices.

- Variable indices: expressions like `%sigma_157721` or `%gam_166722`, excluding momentum indices.

- Compound indices: complex identifiers with multiple parts, like `gamma_gam_166722`.
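The three rules above can be sketched with Python's `re` module. The regular expressions here are hypothetical reconstructions from the examples in this section, not the project's actual patterns. Each distinct identifier is renumbered in order of first appearance within one expression, separately for each index type, so repeated (contracted) indices stay identical after normalization.

```python
import re

# Hypothetical patterns built from the examples above.
COMPOUND_RE = re.compile(r"(?<![%\w])([A-Za-z]+)_([A-Za-z]+)_(\d+)\b")  # gamma_gam_166722
VARIABLE_RE = re.compile(r"%([A-Za-z]+)_(\d+)\b")                       # %sigma_157721
MOMENTUM_RE = re.compile(r"(?<![%\w])([ijk])_(\d+)\b")                  # i_36289


def renumber(pattern: re.Pattern, text: str) -> str:
    """Replace the trailing number of each distinct match with 1, 2, 3, ...
    in order of first appearance; repeated identifiers map to the same value."""
    mapping = {}

    def repl(match: re.Match) -> str:
        token = match.group(0)
        if token not in mapping:
            digits = match.group(pattern.groups)  # last group = numeric suffix
            mapping[token] = token[: -len(digits)] + str(len(mapping) + 1)
        return mapping[token]

    return pattern.sub(repl, text)


def normalize_indices(expr: str) -> str:
    # Compound first, then %-variables, then momentum indices. The lookbehinds
    # and the required leading % keep each pattern from matching inside
    # identifiers of another type.
    for pattern in (COMPOUND_RE, VARIABLE_RE, MOMENTUM_RE):
        expr = renumber(pattern, expr)
    return expr


expr = "i_36289*%sigma_157721*gamma_gam_166722*%gam_166722*j_5*%sigma_157721"
print(normalize_indices(expr))
# i_1*%sigma_1*gamma_gam_1*%gam_2*j_2*%sigma_1
```

Renumbering per expression means the model only ever sees small index values, while the mapping is one-to-one within each type, so the contraction structure of the expression is preserved.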

This normalization process maintains consistency across expressions while significantly reducing the complexity of the input space.

Models Implemented

1. T5 Transformer (Baseline)

We implemented a standard encoder-decoder transformer model using Google’s T5 Small architecture as our baseline. This well-established model provides a good reference point for evaluating the performance of our TITANS implementation.

2. MAC TITANS Model

Our implementation of the Memory As Context Transformer architecture includes:

- Neural memory components for enhanced pattern recognition

- Segmented attention for handling long sequences

- Adaptive memory updates for learning complex transformations
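Segmented attention can be illustrated with a block-causal mask: each position attends only to earlier positions in its own segment, so each position's attention cost depends on the segment length rather than on the full sequence length. In MAC, information crosses segment boundaries through the long-term memory instead. The NumPy sketch below rests on our own simplifying assumptions (single head, no projections, hypothetical function names) and is not the titans-pytorch implementation; for clarity it also builds the full score matrix and masks it, whereas an efficient implementation would compute each segment's block separately.

```python
import numpy as np


def segmented_causal_mask(seq_len: int, segment_len: int) -> np.ndarray:
    """mask[i, j] is True iff j <= i and positions i, j fall in the same segment."""
    pos = np.arange(seq_len)
    same_segment = (pos[:, None] // segment_len) == (pos[None, :] // segment_len)
    causal = pos[None, :] <= pos[:, None]
    return same_segment & causal


def segmented_attention(x: np.ndarray, segment_len: int) -> np.ndarray:
    """Single-head self-attention restricted to causal windows within segments."""
    scores = x @ x.T / np.sqrt(x.shape[1])
    scores = np.where(segmented_causal_mask(len(x), segment_len), scores, -np.inf)
    scores -= scores.max(axis=1, keepdims=True)  # diagonal is always unmasked
    weights = np.exp(scores)                     # masked entries become 0
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ x


x = np.random.default_rng(0).normal(size=(6, 4))
print(segmented_causal_mask(6, 3).astype(int))
out = segmented_attention(x, segment_len=3)
```

The first token of each segment can only attend to itself, which is exactly the gap that the persistent and long-term memory tokens fill in the MAC design.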

Results

The TITANS model substantially outperforms the baseline T5 model on this specialized physics task, in both sequence-level and token-level test accuracy.

Performance Comparison

| Model | Test Sequence Accuracy | Test Token Accuracy |
|---|---|---|
| T5 Transformer | 5.14% | 33.66% |
| MAC TITANS | 40.23% | 60.28% |
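The table reports two metrics. A common way to define them, which we assume here (the project's exact handling of padding and sequence length may differ), is that token accuracy is the fraction of non-padding target tokens predicted correctly, and sequence accuracy is the fraction of test sequences reproduced exactly. The function below and its `pad_id=0` convention are hypothetical.

```python
import numpy as np


def sequence_and_token_accuracy(pred, target, pad_id=0):
    """pred, target: (batch, seq_len) integer token ids, aligned position by position.

    Returns (sequence_accuracy, token_accuracy), ignoring padding positions.
    """
    pred, target = np.asarray(pred), np.asarray(target)
    mask = target != pad_id  # real (non-padding) target tokens
    correct = (pred == target) & mask
    token_acc = correct.sum() / mask.sum()
    # A sequence counts only if every non-padding token is correct.
    seq_acc = np.all(correct | ~mask, axis=1).mean()
    return float(seq_acc), float(token_acc)


target = [[5, 6, 7, 0], [5, 6, 7, 8]]
pred = [[5, 6, 7, 9], [5, 6, 1, 8]]
seq_acc, tok_acc = sequence_and_token_accuracy(pred, target)
print(seq_acc, tok_acc)  # sequence accuracy 0.5, token accuracy 6/7
```

Because a single wrong token fails the whole sequence, sequence accuracy is typically much lower than token accuracy, as it is for both models in the table.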

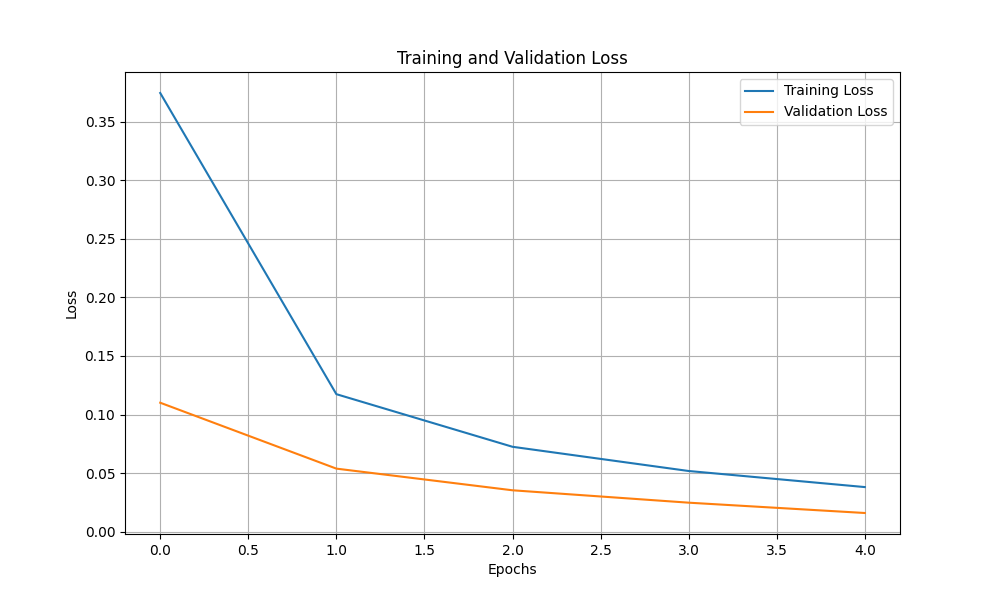

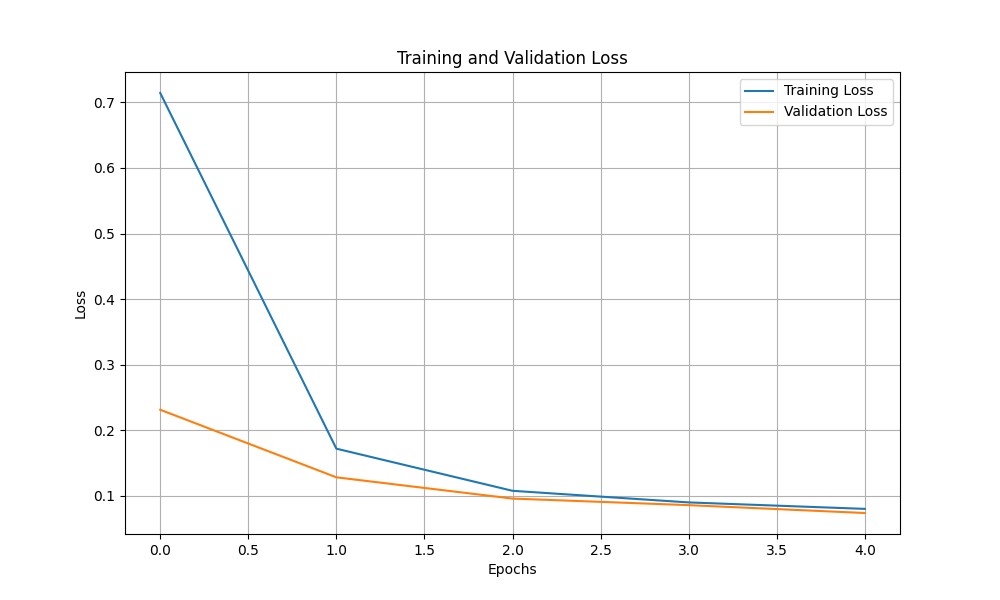

Training Curves

The training curves reveal that the TITANS model not only achieves better final performance but also demonstrates more efficient learning, with faster convergence and more stable training dynamics.

Future Work

Future directions for this project include:

- Scaling and tuning the model to handle more complex physics expressions

- Exploring other TITANS variants (Memory as Gate, Memory as Layer) for this task

- Extending the approach to other datasets and problems

Acknowledgements

This code is adapted from the following sources:

- TITANS-PyTorch by lucidrains: https://github.com/lucidrains/titans-pytorch

- Skanformer by Riteshbhalerao11: https://github.com/Riteshbhalerao11/Skanformer

This project was developed as part of a Google Summer of Code application for the “TITANS for squared amplitude calculation” project.